Accuracy

Accuracy is the ability of an instrument to deliver measurements that are close to the true value of the quantity being measured. It is usually stated as a percentage or as a value with a given number of digits.

Simple formula for determining accuracy

Accuracy is usually quantified as the percentage error of a reading relative to the true value:

Error (%) = [(Measured Value – True Value) / True Value] × 100
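The formula above can be sketched in a few lines of Python. The function name `percent_error` and the example voltages are illustrative assumptions, not values from any particular instrument.

```python
def percent_error(measured: float, true_value: float) -> float:
    """Signed error of a reading, as a percentage of the true value."""
    if true_value == 0:
        # The ratio is undefined when the true value is zero.
        raise ValueError("true_value must be non-zero")
    return (measured - true_value) / true_value * 100.0


# Hypothetical example: a 10.0 V reference that the instrument reads as 10.2 V.
error_pct = percent_error(10.2, 10.0)
print(f"Error: {error_pct:+.2f} %")
```

A positive result means the instrument reads high, and a negative result means it reads low. The smaller the magnitude, the more accurate the reading.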

Degree of Accuracy

In general, the degree of accuracy is half of the unit of measurement on either side of the reading.

Examples

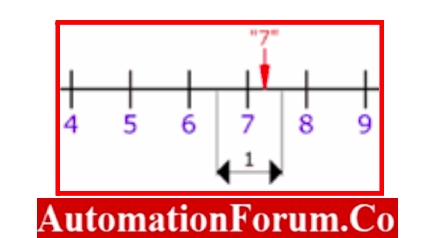

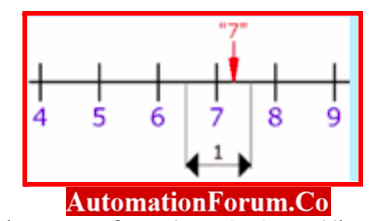

Any value between 6 1/2 and 7 1/2 is measured as a “7” when your instrument measures in “1”s.

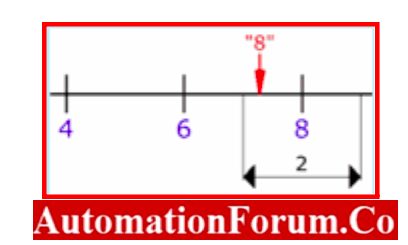

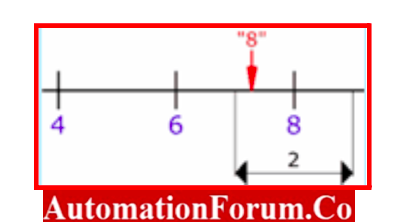

Similarly, any value between 7 and 9 is measured as “8” when your instrument measures in “2”s.

Plus or Minus

The “plus or minus” sign (±) can be used to show the error.

When the value might range from 6 1/2 to 7 1/2, it is written as 7 ± 0.5, and the error is 0.5.

When the value might range from 7 to 9, it is written as 8 ± 1, and the error is 1.
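The two examples above follow the same rule: the error is half of the instrument's measuring step. A minimal sketch, in which the helper name `reading_interval` is an assumption made for illustration:

```python
def reading_interval(reading: float, step: float) -> tuple[float, float, float]:
    """Return (low, high, error) for a reading taken to the nearest `step`."""
    if step <= 0:
        raise ValueError("step must be positive")
    error = step / 2.0  # half a unit on either side of the reading
    return reading - error, reading + error, error


# The two cases from the text: measuring in 1s and measuring in 2s.
for reading, step in [(7, 1), (8, 2)]:
    low, high, error = reading_interval(reading, step)
    print(f"{reading} ± {error:g} covers {low:g} to {high:g}")
```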

Instrumentation Error Specifications with accuracy specification

- The level of uncertainty present in a measurement produced by a certain instrument under a specific set of environmental or other qualifying conditions is represented by an accuracy specification.

- The accuracy specification is typically thought of as the highest level of performance that any sample of the product line is capable of meeting.

- Accuracy is often expressed as ±(gain error + offset error).

- Comparing instruments can be challenging since manufacturers can use a variety of formats to express accuracy.

- Sometimes the gain and offset errors are combined into a single specification that states accuracy only in terms of A/D counts or parts per million.

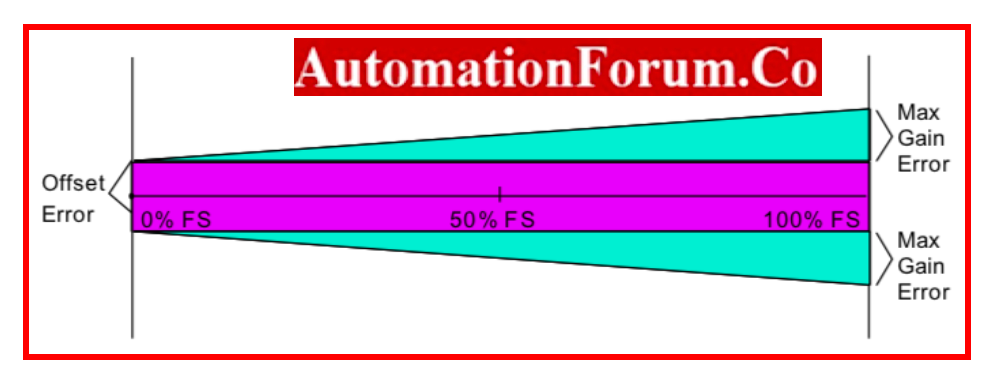

- The accompanying graph depicts the relationship between the gain and offset error components throughout a measurement range.

- The offset error is the dominant term when the instrument is operated in the lower portion of a chosen range. The gain error becomes significant when the instrument is operated close to the full-scale value of the selected range.
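To make the gain and offset behaviour concrete, the sketch below assumes a specification written in the common form ±(% of reading + % of range), where the first term acts as the gain error and the second as the offset error. The function name and the numeric specification are hypothetical and are not taken from any real data sheet.

```python
def error_terms(reading: float, full_scale: float,
                gain_pct: float, offset_pct: float) -> tuple[float, float]:
    """Return (gain_term, offset_term) in the same units as the reading.

    Assumes a spec of the form ±(gain_pct % of reading + offset_pct % of range).
    """
    gain_term = abs(reading) * gain_pct / 100.0   # scales with the reading
    offset_term = full_scale * offset_pct / 100.0  # fixed across the range
    return gain_term, offset_term


# Hypothetical spec: ±(0.01 % of reading + 0.005 % of range) on a 10 V range.
for reading in (0.5, 5.0, 10.0):
    gain, offset = error_terms(reading, 10.0, 0.01, 0.005)
    total = gain + offset
    print(f"{reading:5.1f} V: gain {gain * 1e6:.0f} uV, offset {offset * 1e6:.0f} uV, "
          f"total {total / reading * 100:.4f} % of reading")
```

With these assumed numbers, the fixed offset term outweighs the gain term near the bottom of the range, while the gain term is the larger of the two at full scale. Expressed as a percentage of the reading, the total uncertainty is worst for small readings.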

Uncertainty vs. Reading % of full scale

- Instrument specifications can be expressed in several ways, including parts per million (ppm), percent, or counts. When the values are small, ppm is used instead of percent (10000 ppm = 1%).
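The conversion between ppm and percent follows directly from 10000 ppm = 1%. A minimal sketch, with helper names chosen only for illustration:

```python
PPM_PER_PERCENT = 10_000  # 1 % = 10000 ppm


def percent_to_ppm(percent: float) -> float:
    """Convert a value in percent to parts per million."""
    return percent * PPM_PER_PERCENT


def ppm_to_percent(ppm: float) -> float:
    """Convert a value in parts per million to percent."""
    return ppm / PPM_PER_PERCENT


# A hypothetical 50 ppm specification is easier to read than its percent form.
print(f"50 ppm = {ppm_to_percent(50)} %")
print(f"1 % = {percent_to_ppm(1):.0f} ppm")
```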

Accuracy Specification in Data sheet of Instruments

- The accuracy specification in a data sheet typically accounts only for measurement uncertainty caused by the hardware.

- Variations in the production of electronics components and subassemblies will always have an impact on the hardware.

- The main sources of these variations are A/D converters, resistors, and amplifiers.

- The specifications also include a time period from the most recent calibration, such as 24 hours or 90 days, over which the specification is applicable. An out-of-spec reading could be caused by hardware that is flawed but hasn’t completely failed.

- Regular calibration cycles for test and measurement instruments reduce this risk; skilled calibration technicians can identify when an abnormal adjustment is needed to bring an instrument back into calibration.

- However, extraneous sources of inaccuracy, such as noise from power lines (50/60 Hz), lead resistance, magnetic interference, ground loops, thermoelectric EMFs, and RF, can also affect measurements.

- Test procedures must include safeguards against these sources of error, as they are not covered by accuracy specifications.

Factors that may impact an instrument’s accuracy include:

1. Calibration

- To achieve accurate measurements, proper calibration is necessary. Measurement errors may result from improper or infrequent calibration of an instrument.

2. Environmental Factors

- Accuracy may be impacted by the operational environment. Measurement errors can be caused by elements like temperature, humidity, pressure, and electromagnetic interference.

3. Stability

- An instrument’s stability is defined as its capacity to sustain accurate measurements over time. Stability may be affected by factors such as component ageing, temperature changes, and external influences.

4. Linearity

- The degree to which an instrument’s response conforms to a straight line across its working range is referred to as linearity. When measuring close to the upper or lower boundaries of the instrument’s range, nonlinearities can introduce errors.

5. Sensitivity

- An instrument’s sensitivity determines its capacity to detect minute variations in the quantity being measured. Because a more sensitive instrument can detect smaller differences, higher sensitivity often supports greater accuracy.

6. Resolution

- The smallest increment an instrument can detect or display is known as resolution. More accurate measurements may result from the use of instruments with higher resolution.

7. Interference

- The accuracy of electronic equipment can be affected by interference from external sources, such as electromagnetic fields or electrical noise.

- Effective shielding and grounding methods can reduce the impact of interference.

8. Instrument Limitations

- Every instrument has limitations, including its measurement range, resolution, linearity, and sensitivity.

- For reliable measurements, it is essential to recognize these constraints and take them into account.

9. Human Factors

- Inaccuracies can be introduced by human errors, such as improper technique, reading mistakes, or incorrect data entry.

- Human error can be reduced with the use of adequate training, standardised procedures, and systems for double-checking.

10. Signal-to-Noise Ratio

- Inaccurate measurements can result from a poor signal-to-noise ratio.

- Instruments that generate a lot of noise relative to the signal being monitored may not be as accurate.

11. Sampling Rate

- A low sampling rate might lead to erroneous readings or aliasing in applications where signals change quickly.

- To accurately capture and represent the desired signal, there must be a sufficient sampling rate.

12. Instrument Drift

- Over time, instrument drift may occur, resulting in inaccurate measurements.

- Accuracy can be maintained and drift corrected with routine calibration and verification.
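The aliasing mentioned under Sampling Rate (item 11) can be illustrated with a short calculation. The sketch below uses the standard frequency-folding relation for an ideal sampler with no anti-aliasing filter, which is an assumption added here. The function name and the example frequencies are also illustrative.

```python
def apparent_frequency(signal_hz: float, sample_rate_hz: float) -> float:
    """Frequency at which a sine wave appears after ideal sampling.

    Frequencies above half the sampling rate fold back into the
    range 0 to sample_rate_hz / 2.
    """
    if sample_rate_hz <= 0:
        raise ValueError("sample_rate_hz must be positive")
    nearest_multiple = round(signal_hz / sample_rate_hz)
    return abs(signal_hz - nearest_multiple * sample_rate_hz)


# Hypothetical case: 60 Hz power-line noise sampled at two different rates.
for rate in (50.0, 200.0):
    seen = apparent_frequency(60.0, rate)
    print(f"60 Hz sampled at {rate:g} Hz appears at {seen:g} Hz")
```

When the sampling rate is more than twice the signal frequency, the signal appears at its true frequency. Below that rate, it shows up at a lower, misleading frequency.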